Introduction

Creating an Amazon Elastic Kubernetes Service (EKS) cluster involves many interdependent AWS resources: networking, IAM roles, the control plane, and worker nodes. Terraform lets you describe all of them as code, which makes the cluster reproducible and easier to change and scale. In this blog post, we walk through creating an EKS cluster with Terraform, then deploy an app on it and expose it through Network Load Balancers.

Why is EKS a great choice for running Kubernetes clusters on AWS?

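EKS is a managed Kubernetes service. AWS provisions, operates, and patches the Kubernetes control plane (the API server and etcd) for you, so you only manage the worker nodes and the applications running on them. With managed node groups, EKS also creates and updates the worker EC2 instances. This makes it straightforward to deploy, scale, and manage containerized applications using Kubernetes.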

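Benefits of using Terraform to create an EKS cluster

The main benefit is that your infrastructure is defined in code that you keep under version control. Every change is reviewed and recorded. If a change causes problems, you can roll back by applying an earlier version of the configuration. Terraform also shows a plan of what it will change before applying anything, and it automates provisioning. Creating, updating, or destroying the cluster therefore becomes a repeatable command rather than a series of manual steps in the console.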

Before we dive into creating an EKS cluster with Terraform, make sure you have the following:

- An AWS account
- An IAM user with the permissions needed to create the VPC, IAM, EC2, and EKS resources
- Terraform v1.1.9 or later
- AWS CLI version 2
- kubectl, to check the nodes and deploy the app once the cluster is running
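The article does not show the Terraform and provider configuration. Here is a minimal sketch. The AWS provider version constraint is an assumption. The region matches the `ap-south-1` used with `aws eks update-kubeconfig` later in the post.

```hcl
terraform {
  # Matches the Terraform prerequisite listed above
  required_version = ">= 1.1.9"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0" # assumption: any recent major version should work
    }
  }
}

provider "aws" {
  # Region where the VPC and EKS cluster are created
  region = "ap-south-1"
}
```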

Now, let's start by setting up the necessary AWS resources. We create a VPC and subnets with Terraform, as shown in the screenshots below.

The subnets carry the tags `"kubernetes.io/cluster/eks" = "shared"` and `"kubernetes.io/role/elb" = 1`. These tags let EKS discover the subnets and use them when it launches load balancers.

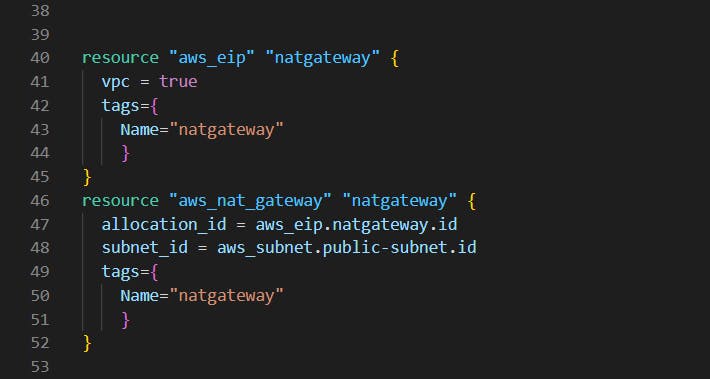

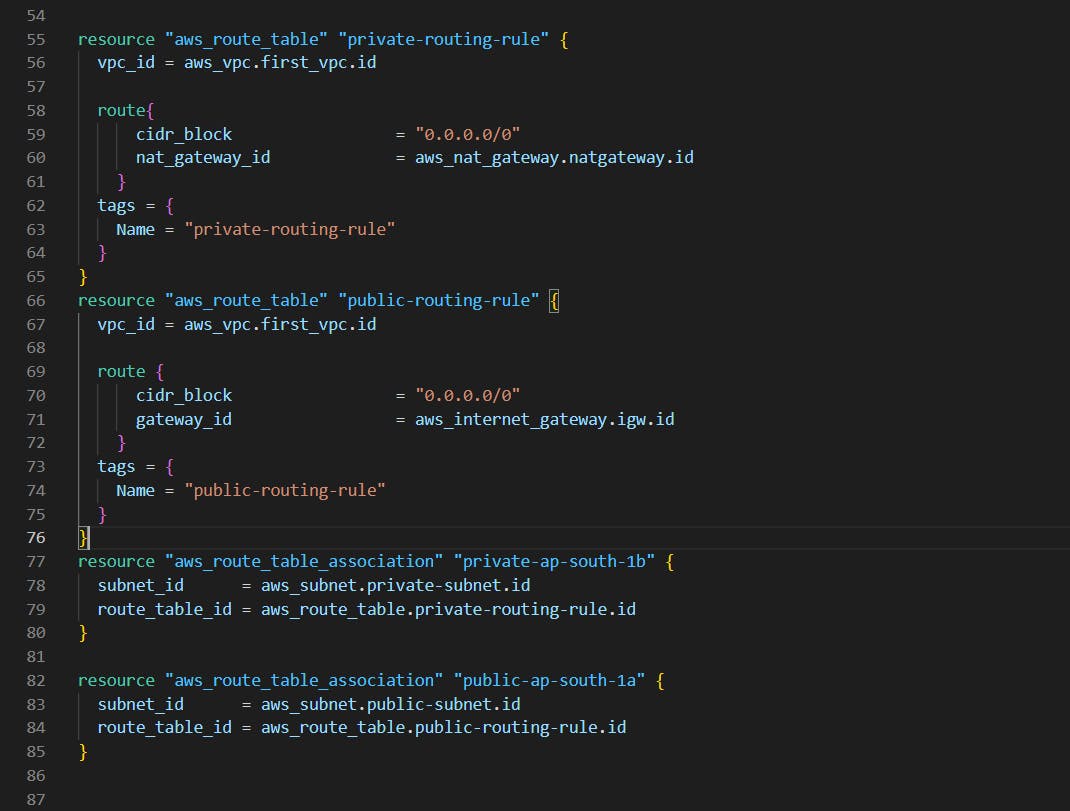
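The configuration above is available only as screenshots, so here is a minimal text sketch of the same idea. It is not the author's exact code:

- The VPC resource name `main`, the CIDR ranges, and the Availability Zones are assumptions.
- The subnet names `public-subnet` and `private-subnet` match the ones the EKS resources reference later.
- The two subnets sit in different Availability Zones because EKS requires subnets in at least two.
- The private subnet is also tagged `kubernetes.io/role/internal-elb`, which is the tag EKS looks for when placing an internal load balancer.

```hcl
# VPC for the cluster; EKS requires DNS support and DNS hostnames to be enabled
resource "aws_vpc" "main" { # hypothetical resource name
  cidr_block           = "10.0.0.0/16" # assumption
  enable_dns_support   = true
  enable_dns_hostnames = true

  tags = {
    Name = "eks-vpc"
  }
}

# Public subnet: hosts the NAT gateway and internet-facing load balancers
resource "aws_subnet" "public-subnet" {
  vpc_id                  = aws_vpc.main.id
  cidr_block              = "10.0.1.0/24"  # assumption
  availability_zone       = "ap-south-1a" # assumption
  map_public_ip_on_launch = true

  tags = {
    Name                        = "public-subnet"
    "kubernetes.io/cluster/eks" = "shared"
    "kubernetes.io/role/elb"    = "1"
  }
}

# Private subnet in a second AZ: hosts the worker nodes and internal load balancers
resource "aws_subnet" "private-subnet" {
  vpc_id            = aws_vpc.main.id
  cidr_block        = "10.0.2.0/24"  # assumption
  availability_zone = "ap-south-1b" # assumption

  tags = {
    Name                              = "private-subnet"
    "kubernetes.io/cluster/eks"       = "shared"
    "kubernetes.io/role/internal-elb" = "1"
  }
}
```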

Next, we create a NAT gateway so that instances in the private subnet have outbound internet access, for example to pull container images.
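A minimal sketch of this step. It assumes the VPC resource is named `aws_vpc.main`, which is a hypothetical name. The public subnet is `aws_subnet.public-subnet`, the name used by the EKS resources in this post. A NAT gateway needs an Elastic IP and must sit in a public subnet whose VPC has an internet gateway.

```hcl
# Internet gateway: gives the public subnet a route to the internet
resource "aws_internet_gateway" "igw" {
  vpc_id = aws_vpc.main.id # hypothetical VPC resource name
}

# Elastic IP for the NAT gateway
# (domain = "vpc" is the AWS provider v5 syntax; older versions used vpc = true)
resource "aws_eip" "nat" {
  domain = "vpc"
}

# NAT gateway in the public subnet; private instances send outbound traffic through it
resource "aws_nat_gateway" "nat" {
  allocation_id = aws_eip.nat.id
  subnet_id     = aws_subnet.public-subnet.id

  # The internet gateway must exist before the NAT gateway can reach the internet
  depends_on = [aws_internet_gateway.igw]
}
```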

Then we create route tables with the required routes and associate them with the subnets.
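A minimal sketch of the route tables. It assumes the VPC, internet gateway, and NAT gateway are named `aws_vpc.main`, `aws_internet_gateway.igw`, and `aws_nat_gateway.nat`, all hypothetical names. The public subnet sends internet-bound traffic to the internet gateway, and the private subnet sends it to the NAT gateway.

```hcl
# Public route table: default route to the internet gateway
resource "aws_route_table" "public" {
  vpc_id = aws_vpc.main.id

  route {
    cidr_block = "0.0.0.0/0"
    gateway_id = aws_internet_gateway.igw.id
  }
}

# Private route table: default route to the NAT gateway
resource "aws_route_table" "private" {
  vpc_id = aws_vpc.main.id

  route {
    cidr_block     = "0.0.0.0/0"
    nat_gateway_id = aws_nat_gateway.nat.id
  }
}

# Associate each route table with its subnet
resource "aws_route_table_association" "public" {
  subnet_id      = aws_subnet.public-subnet.id
  route_table_id = aws_route_table.public.id
}

resource "aws_route_table_association" "private" {
  subnet_id      = aws_subnet.private-subnet.id
  route_table_id = aws_route_table.private.id
}
```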

Next, we create an IAM role for the EKS control plane, attach the `AmazonEKSClusterPolicy` managed policy to it, and create a cluster named `eks` that uses this role.

```hcl
# IAM role that the EKS control plane assumes
resource "aws_iam_role" "eks_role" {
  name = "eks_role"

  assume_role_policy = <<POLICY
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "eks.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
POLICY
}

resource "aws_iam_role_policy_attachment" "eks_cluster_policy" {
  # The ARN of the policy you want to apply
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSClusterPolicy"

  # The role to which the policy should be applied
  role = aws_iam_role.eks_role.name
}

resource "aws_eks_cluster" "eks" {
  # Name of the cluster
  name = "eks"

  # ARN of the IAM role that provides permissions for the Kubernetes
  # control plane to make calls to AWS API operations on your behalf
  role_arn = aws_iam_role.eks_role.arn

  # Desired Kubernetes control plane version
  version = "1.24"

  vpc_config {
    # Whether the Amazon EKS private API server endpoint is enabled
    endpoint_private_access = false

    # Whether the Amazon EKS public API server endpoint is enabled
    endpoint_public_access = true

    # Subnets must be in at least two different Availability Zones
    subnet_ids = [
      aws_subnet.public-subnet.id,
      aws_subnet.private-subnet.id,
    ]
  }

  # Ensure that IAM role permissions are created before and deleted after
  # EKS cluster handling
  depends_on = [
    aws_iam_role_policy_attachment.eks_cluster_policy,
  ]
}
```

Next, we create an IAM role for the worker nodes, attach the three managed policies they need, and create a managed node group with two nodes.

```hcl
# Create IAM role for EKS node group
resource "aws_iam_role" "worker_nodes_role" {
  name = "worker_nodes_role"

  assume_role_policy = <<POLICY
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "ec2.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
POLICY
}

# Resource: aws_iam_role_policy_attachment
resource "aws_iam_role_policy_attachment" "eks_worker_node_policy" {
  # The ARN of the policy you want to apply
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSWorkerNodePolicy"

  # The role the policy should be applied to
  role = aws_iam_role.worker_nodes_role.name
}

resource "aws_iam_role_policy_attachment" "eks_cni_policy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy"
  role       = aws_iam_role.worker_nodes_role.name
}

resource "aws_iam_role_policy_attachment" "ec2_container_registry_read_only" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly"
  role       = aws_iam_role.worker_nodes_role.name
}

# Resource: aws_eks_node_group
resource "aws_eks_node_group" "worker_nodes" {
  # Name of the EKS cluster
  cluster_name = aws_eks_cluster.eks.name

  # Name of the EKS node group
  node_group_name = "worker_nodes"

  # ARN of the IAM role that provides permissions for the EKS node group
  node_role_arn = aws_iam_role.worker_nodes_role.arn

  # Identifiers of EC2 subnets to associate with the EKS node group.
  # These subnets must have the following resource tag: kubernetes.io/cluster/CLUSTER_NAME
  # (where CLUSTER_NAME is replaced with the name of the EKS cluster).
  # The worker nodes run only in the private subnet.
  subnet_ids = [
    aws_subnet.private-subnet.id,
  ]

  # Configuration block with scaling settings
  scaling_config {
    # Desired number of worker nodes
    desired_size = 2

    # Maximum number of worker nodes
    max_size = 3

    # Minimum number of worker nodes
    min_size = 1
  }

  # Type of Amazon Machine Image (AMI) associated with the EKS node group
  ami_type = "AL2_x86_64"

  # Type of capacity associated with the EKS node group.
  # Valid values: ON_DEMAND, SPOT
  capacity_type = "ON_DEMAND"

  # Disk size in GiB for worker nodes. 20 GiB is the EKS default;
  # values smaller than the AMI's root volume (such as 5) are rejected.
  disk_size = 20

  # Force version update if existing pods are unable to be drained
  # due to a pod disruption budget issue
  force_update_version = false

  # List of instance types associated with the EKS node group.
  # With the Amazon VPC CNI, a t2.micro node can run at most 4 pods.
  instance_types = ["t2.micro"]

  labels = {
    role = "worker_nodes"
  }

  # Kubernetes version
  version = "1.24"

  # Ensure that IAM role permissions are created before and deleted after
  # EKS node group handling. Otherwise, EKS will not be able to properly
  # delete EC2 instances and Elastic Network Interfaces.
  depends_on = [
    aws_iam_role_policy_attachment.eks_worker_node_policy,
    aws_iam_role_policy_attachment.eks_cni_policy,
    aws_iam_role_policy_attachment.ec2_container_registry_read_only,
  ]
}
```

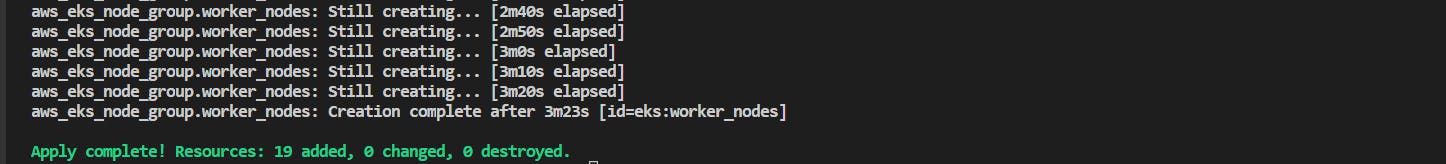

Now, create the cluster by running this Terraform code:

```bash
# Initialize the working directory and download the required providers
terraform init

# Create the required infrastructure (Terraform shows a plan and asks for confirmation)
terraform apply
```

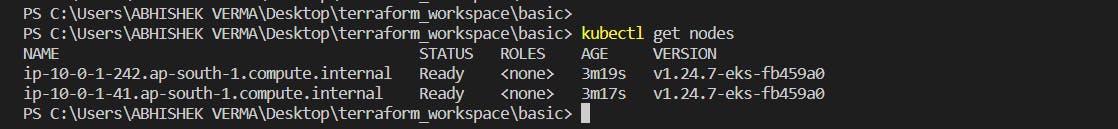

Terraform does not update your local kubeconfig, so after the cluster is created, add its context with the AWS CLI:

```bash
aws eks update-kubeconfig --name eks --region ap-south-1
```

Then check that the worker nodes have joined the cluster (for example, with `kubectl get nodes`):

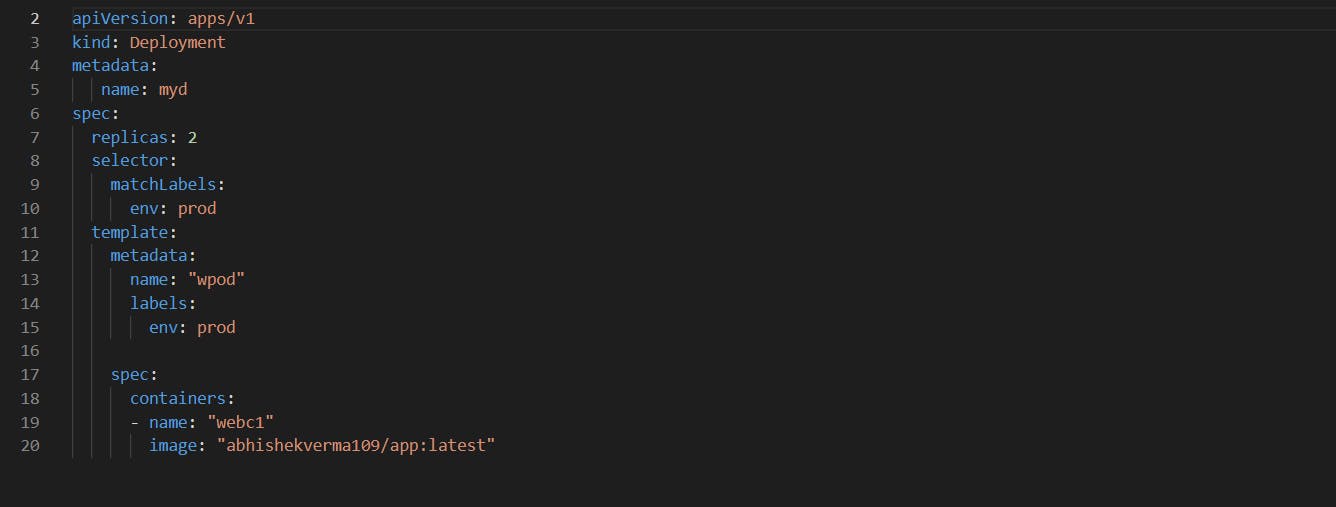

Deploying App on Kubernetes

The manifest used to deploy the app on Kubernetes:

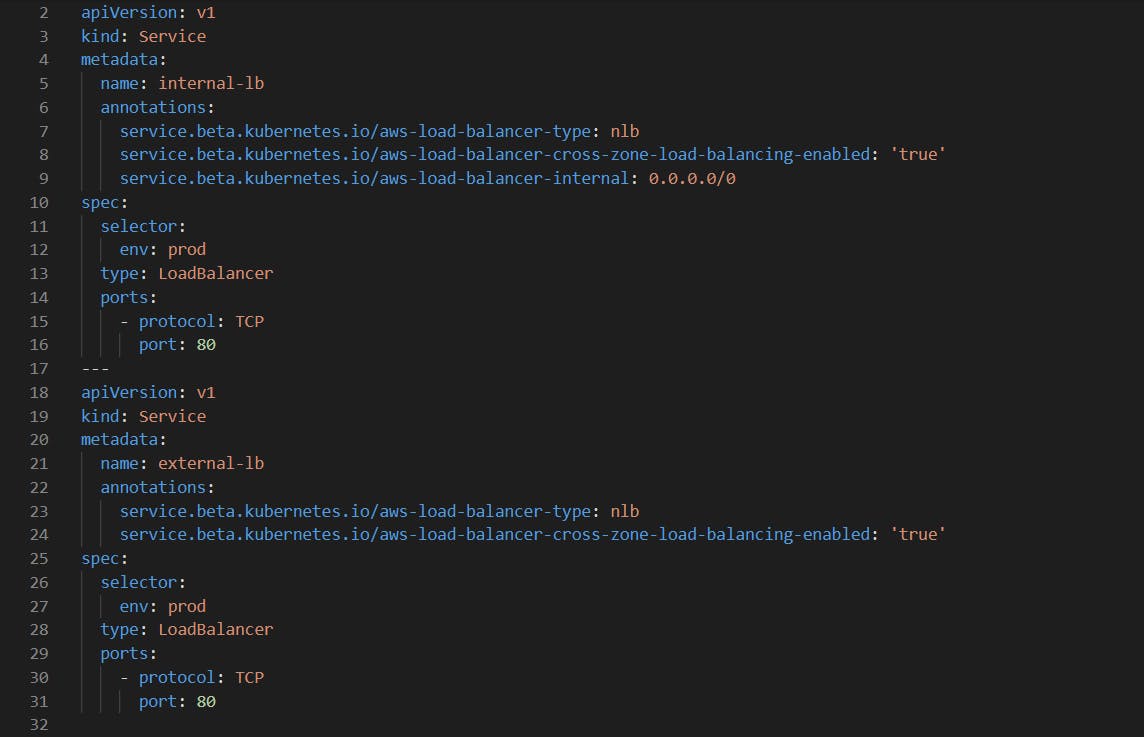
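The manifest above is shown only as a screenshot. If you would rather manage the app with Terraform too, a rough equivalent using the `hashicorp/kubernetes` provider might look like this. It is a sketch, not the author's manifest. The app name `demo-app`, the `nginx` image, and the replica count are hypothetical. It also assumes a separate Terraform configuration, applied after the cluster exists and with the same AWS provider block. Configuring the `kubernetes` provider from a cluster created in the same apply can cause ordering problems.

```hcl
# Look up the existing cluster named "eks" and a short-lived auth token for it
data "aws_eks_cluster" "eks" {
  name = "eks"
}

data "aws_eks_cluster_auth" "eks" {
  name = "eks"
}

provider "kubernetes" {
  host                   = data.aws_eks_cluster.eks.endpoint
  cluster_ca_certificate = base64decode(data.aws_eks_cluster.eks.certificate_authority[0].data)
  token                  = data.aws_eks_cluster_auth.eks.token
}

# Hypothetical app: two nginx replicas labeled app = "demo-app"
resource "kubernetes_deployment_v1" "app" {
  metadata {
    name = "demo-app"
    labels = {
      app = "demo-app"
    }
  }

  spec {
    replicas = 2

    selector {
      match_labels = {
        app = "demo-app"
      }
    }

    template {
      metadata {
        labels = {
          app = "demo-app"
        }
      }

      spec {
        container {
          name  = "demo-app"
          image = "nginx:1.25"

          port {
            container_port = 80
          }
        }
      }
    }
  }
}
```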

Next, we expose the app through two Network Load Balancers (NLBs): an internal one in the private subnet and an internet-facing one in the public subnet.

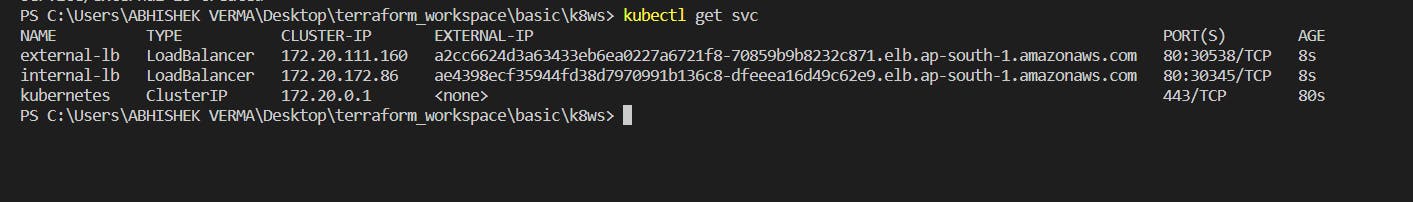
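A comparable sketch with the Terraform `kubernetes` provider. It assumes the provider is already configured for this cluster and the pods carry the hypothetical label `app = "demo-app"`. This relies on the built-in AWS cloud provider that handles `LoadBalancer` services when no load balancer controller is installed:

- The `service.beta.kubernetes.io/aws-load-balancer-type: nlb` annotation requests an NLB.
- The `aws-load-balancer-internal` annotation makes it internal, so it is placed in subnets tagged `kubernetes.io/role/internal-elb`.

The name `external-lb` matches the service named at the end of this post; `internal-lb` is hypothetical.

```hcl
# Internet-facing NLB, placed in subnets tagged kubernetes.io/role/elb
resource "kubernetes_service_v1" "external_lb" {
  metadata {
    name = "external-lb"
    annotations = {
      "service.beta.kubernetes.io/aws-load-balancer-type" = "nlb"
    }
  }

  spec {
    type = "LoadBalancer"
    selector = {
      app = "demo-app" # hypothetical pod label
    }

    port {
      port        = 80
      target_port = 80
    }
  }
}

# Internal NLB, placed in subnets tagged kubernetes.io/role/internal-elb
resource "kubernetes_service_v1" "internal_lb" {
  metadata {
    name = "internal-lb" # hypothetical name
    annotations = {
      "service.beta.kubernetes.io/aws-load-balancer-type"     = "nlb"
      "service.beta.kubernetes.io/aws-load-balancer-internal" = "true"
    }
  }

  spec {
    type = "LoadBalancer"
    selector = {
      app = "demo-app" # hypothetical pod label
    }

    port {
      port        = 80
      target_port = 80
    }
  }
}
```

Once the NLBs are provisioned, the `EXTERNAL-IP` column of `kubectl get svc` shows each load balancer's DNS name.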

Check the services:

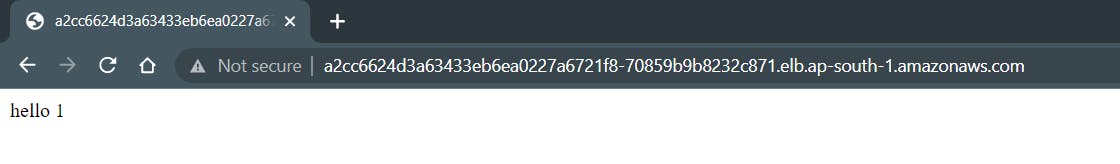

Our app is now exposed through the `external-lb` service.

Thank you for reading.